Anne Bergen (https://knowledgetoaction.ca/) is a community-based evaluator and an alum of Research Impact Canada (RIC) from her time at RIC member University of Guelph. She helped the RIC Evaluation Committee develop a logic model and evaluation framework, and describes that work in the case study introduced below.

Do you want to evaluate a networked knowledge mobilization project or initiative? To articulate and assess how partners’ work is contributing to a common goal? And to have partners in the collaboration feel that the evaluation represents them, so that multiple organizations “buy in” to the evaluation activities and approaches?

You can achieve this if you start with collaborative and iterative logic model development, and use that logic model as a decision aid to complete the rest of the evaluation framework (e.g., evaluation goals, measurement approaches).

Key Messages of this case study

- Work together in a small group that represents the diverse perspectives of a partnership initiative, and develop the logic model and evaluation framework collaboratively and iteratively.

- Create a logic model that maps out target audiences, common activities, what success looks like (outputs, outcomes, impacts), and enabling conditions.

- Then use that logic model to guide decisions about what and when to measure. Instead of trying to measure everything, focus on what’s important and what’s feasible.

Summary

This brief case study example describes a project working with Research Impact Canada (RIC) to create an evaluation framework, beginning with co-creation of a logic model.

Research Impact Canada (RIC) is a network of 16 universities dedicated to using knowledge mobilization to create research impact for the public good. In this project, a small group (the RIC evaluation committee) collaborated through four online workshops to define an evaluation framework that represented the shared knowledge mobilization activities of the larger collaboration, and aligned the framework with goals from RIC’s strategic plan.

This work expanded RIC’s past evaluation approach that focused on monitoring through counting outputs (the products of RIC activities). The current framework explains how activities link to short-term outcomes of improved knowledge, skills, and attitudes, which contribute to longer-term changes of improved individual practice, organizational capacity, and systems support.

About the Research Impact Canada Evaluation Framework Project

Within RIC, each network member has a different knowledge mobilization approach, portfolio, and capacity. For example, some partners have a dedicated campus-wide knowledge mobilization unit with multiple staff, while others focus their work on a faculty or college of a larger institution, or function as a semi-autonomous centre embedded in the local community. Similarly, each RIC member institution has its own plan for tracking its knowledge mobilization work.

RIC had a strong strategic plan and a clear vision, mission, and mandate, but had not yet developed a framework to evaluate outcomes and contributions to downstream impacts. RIC needed a shared framework for understanding and evaluating its common activities and outcomes, so that it could understand the collaboration’s impact while representing the diversity of network partners. Creating a refreshed evaluation plan, starting with a collaboratively defined logic model, allowed RIC to articulate specific shared activities, outcomes, and enabling conditions for success.

In 2017-18, RIC updated its evaluation approach, with work led by the evaluation committee (representing six member institutions), and evaluation guidance and facilitation by Knowledge to Action Consulting.

Project Methods: How We Worked

Small Group Representing Perspectives of Larger Network

When developing the RIC evaluation framework, it was important to draw from the perspectives of members within RIC, while keeping the group small enough for collaborative work.

The “RIC expertise” for building the evaluation framework came from the RIC Evaluation Committee, representing six universities from across Canada:

- Amy Jones, Memorial University

- David Phipps, York University

- Jen Kyffin, University of Victoria

- Lisa Erickson, University of Saskatchewan

- Marcelo Bravo, The University of British Columbia

- Rebecca Moore, University of Guelph

This small (yet representative) group format allowed us to discuss and brainstorm, hear everyone’s point of view, and represent the diversity of the RIC network. The RIC evaluation committee reported to the RIC governance committee, which reviewed and approved the draft approach and final products.

Short Project With Regular Meetings for Iterative Work

Together, we built the logic model and evaluation framework over five months (December 2017 to April 2018). The group work took place over four two-hour online meetings, followed by review of the final framework by email.

The four meetings covered project start-up; logic model development; reviewing the logic model and prioritizing what to measure; and creating draft evaluation indicators, tools, and approach, plus an implementation plan.

The group used a series of “shared screen” online meetings, so that everyone could see the meeting notes or the document being edited in real-time. Draft documents were also shared by email between meetings.

Created Logic Model as Cornerstone of Evaluation Framework

The key piece of work was collaboratively creating a logic model to articulate how RIC’s activities, both within the network and within partner institutions, create specific outcomes that contribute to an ultimate goal. The logic model also describes key audiences of RIC’s work, and enabling conditions for success. By gathering collaborating partners and working together to create the logic model, RIC partners gained a better understanding of the unique circumstances at individual partner institutions, and were able to identify the overlapping commonalities in their knowledge mobilization work.

The RIC logic model provides a framework for deciding what is important to monitor and measure: from process quality, to outputs, to outcomes. Having this framework in place allows RIC to define its evaluation goals and redefine them as needs and resources change. The framework also supports RIC in identifying monitoring and measurement tools and approaches. RIC partners can use the logic model to identify knowledge mobilization evaluation priorities at their own institutions, or to work out how their current evaluation activities roll up into RIC’s priorities.

Logic Model Reflection Questions

Audiences

Who are the internal and external audiences (stakeholders/partners/end users) of Research Impact?

Activities

What are the activities of Research Impact on your campus? What are the activities of Research Impact within the broader network? What activities are aimed at internal vs. external audiences? How do you coordinate with other programs and systems on campus and within Research Impact?

Outcomes and impacts

What do you expect to happen as a result of Research Impact’s activities? What does success look like in the shorter-term? In the longer-term? What might be the changes in knowledge, attitudes, skills, and actions for your different audiences? What are the downstream organizational and systems level impacts?

Enabling conditions and assumptions

What are the enabling conditions of the program (e.g., resources, approach to service delivery)? What needs to be in place for the program to have impact (for activities to lead to outcomes)? Are there contextual or external factors that need to be considered on your campus or in your community?

Start With Personal Reflection Questions

Logic model development started with a series of questions shared by email before the first logic model meeting, so that members could reflect from their own perspective on Research Impact’s audiences, activities, outcomes, impacts, and enabling conditions.

Create Mind Map Outline of Audiences, Activities, Outcomes, and Enabling Conditions

Together, the group mapped the activities, outcomes, impacts, and enabling conditions for RIC in a mind map. This format is helpful for early logic model development, as it allows themes to be clustered and re-clustered as ideas emerge and evolve among the group.

Distill a Clean One Page Version of the Logic Model

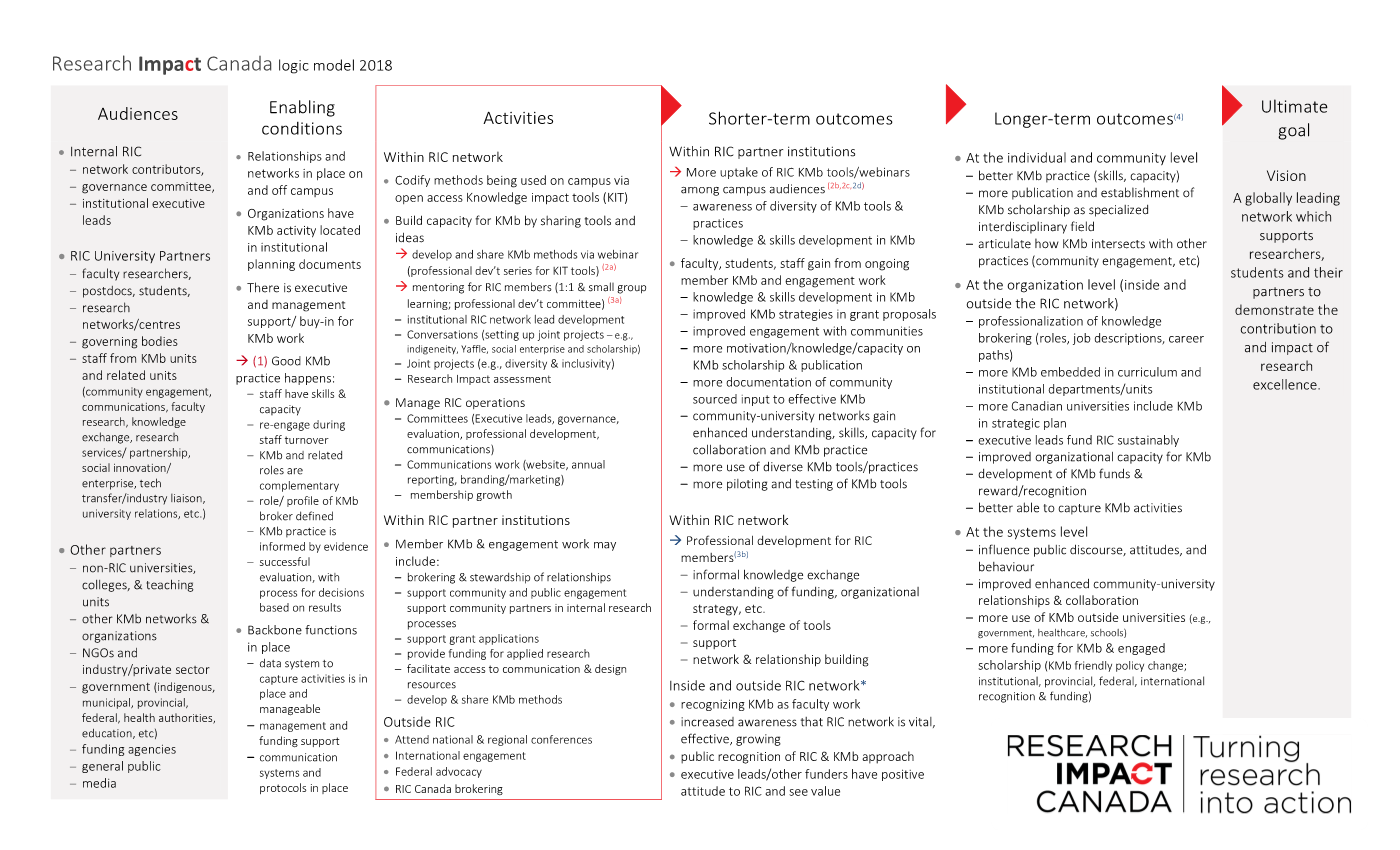

The column-based layout for RIC’s logic model is based on the University of Wisconsin-Madison’s (2009) template, as adapted by the Canadian Water Network (2015).

Changes to the template include removing the input and output columns. Instead, the activities column describes the types of outputs created; rather than tracking specific inputs, RIC described the various enabling conditions that would support activities contributing to outcomes and impacts.

Figure 2 RIC Logic Model (2018)

Project Results: What We Created

The Research Impact Canada Logic Model

The logic model was designed to be general enough to work across very different institutions, and to make explicit and align RIC partners’ understanding of the network collaboration.

The RIC logic model identified activities and outcomes by layers of audiences and end-users for RIC knowledge mobilization work. These audiences include those internal to the RIC network (e.g., network contributors, governance), those within partner institutions (e.g., faculty researchers, staff, students), and external partners (e.g., funding agencies, non-RIC universities and colleges).

The enabling conditions explain what needs to be in place for RIC’s activities to create the desired outcomes that will contribute to impacts and ultimate goals.

RIC Example: Together with having the right relationships, buy-in, and backbone functions, a key assumption is that “good knowledge mobilization (KMb) practice happens” such that staff have the capacity to engage in skillful and evidence-informed KMb practice.

Activities are broken out into those within the RIC network, those within RIC partner institutions, and those outside of RIC.

RIC Example: Within the network, partners codify successful KMb methods being used on campus, build capacity for KMb, and manage RIC operations. Within RIC partner institutions, work differs depending on the location, and may include brokering, community engagement, grant application support, and sharing KMb methods. Outside of RIC, members attend conferences and engage nationally and internationally.

Shorter-term outcomes are the changes in knowledge, awareness, attitudes, and motivation that contribute to later changes in individual practice, organizational policy and capacity, and broader systems. Short-term outcomes are broken out within RIC partner institutions, within the RIC network, and at the level of systems inside and outside the network.

RIC Example: Within RIC partner institutions, the key short-term outcomes are greater uptake of RIC KMb tools and webinars among campus audiences, and benefits to faculty, students, and staff from ongoing member KMb and engagement work. Within the RIC network, the short-term outcome is professional development for RIC members, who gain knowledge and support networks. In the broader context (both inside and outside the RIC network), the key outcomes are seeing knowledge mobilization as a core part of faculty researchers’ work, and awareness of RIC as a vital and effective network.

Longer-term outcomes are the changes in behaviour, policy, and practice that are the logical next step after the short-term outcomes.

RIC Example: At the individual and community level, the key long-term outcome is more and better knowledge mobilization practice and scholarship. At the organizational level (inside and outside the RIC network), the key longer-term outcome goals are professionalization of knowledge brokering, embedding of knowledge mobilization in organizational strategic plans and curriculum, and development of funding and rewards for KMb work. At the systems level, outcome goals relate to systems reward, recognition, and funding for knowledge mobilization, improved community-university relations, and enhanced use of knowledge mobilization outside of universities.

The ultimate goal is the desired end-point of RIC’s activities. Ultimate goals are typically aspirational change statements and, as such, should align with an organization or initiative’s vision, mission, and/or mandate.

RIC Example: The ultimate goal is RIC’s vision: A globally leading network which supports researchers, students and their partners to demonstrate the contribution to and impact of research excellence.

RIC Evaluation Goals, Measurement Priorities, and Methods

The RIC group used their newly updated logic model to guide development of the larger evaluation framework.

The RIC logic model provides a comprehensive look at the network’s audiences, activities, outcomes, impacts, and enabling conditions. Having a current understanding of what RIC is doing, with whom, and with what expected result makes it easier to determine what RIC wants to understand through evaluation (goals) and what, when, and how to measure (priorities and methods).

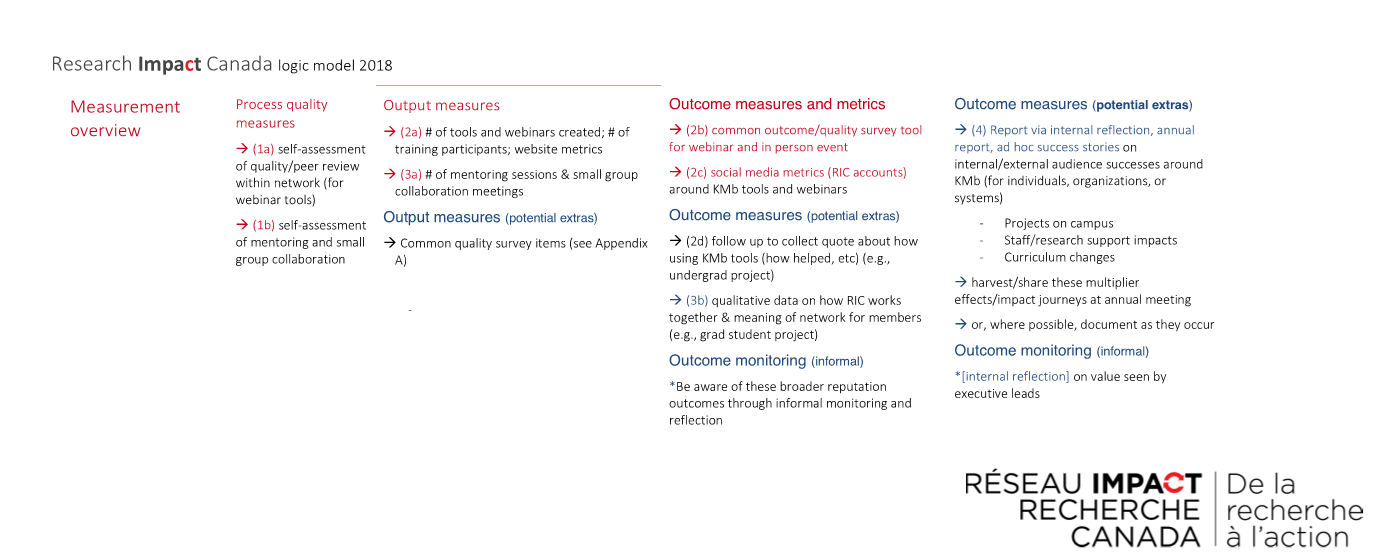

In particular, RIC used the logic model to identify what was (1) important and (2) feasible to evaluate, and to develop indicators. Figure 3 shows an overview of RIC’s measurement priorities and indicators.

Figure 3 RIC Evaluation – Measurement Overview

Don’t Measure Everything: Identify Evaluation Goals and Approaches

While the entire logic model is large and complex, it’s not necessary (or expected) to measure everything on the logic model at the same time. RIC focused on things to measure that were within their scope of control.

The RIC group used the options in the logic model to prioritize where to focus evaluation measurement. As shown in the Measurement Overview in Figure 3, RIC decided to track short-term outcomes from the activities most closely linked to the RIC network, as opposed to individual partner operations. The measurement priorities included assessing changes in knowledge, awareness, and capacity resulting from campus KMb events and from mentoring within the network.

Develop Feasible Evaluation Methods and Tools

RIC developed tools to assess the key outcomes and outputs of interest, as well as indicators of the quality of its work. The evaluation had to be feasible within the resources available to the RIC network and the varying resources and capacity of different member institutions. Therefore, as shown in Figure 3, the group decided to focus on post-event surveys to assess short-term outcomes related to changes in knowledge, attitudes, and capacity, as well as the quality of training offered. To assess network mentoring and collaboration, RIC plans to use regular reflection, group discussion, and engagement tracking.

Attaining short-term outcome goals by improving knowledge and capacity is expected to contribute to improved KMb practice and professionalization, and therefore to amplified research impact. When resources permit (e.g., via summer graduate research assistantships), RIC will use more intensive qualitative inquiry to understand how and where KMb training and capacity building contribute to longer-term outcomes and impacts.

Implementation and Next Steps

Since April 2018, the framework has been implemented at RIC partner institutions. RIC has a graduate student developing an operational plan to capture qualitative data around longer-term outcomes. RIC is also adapting the tracking tools to better meet their reporting needs.

Overall, by working together to capture multiple perspectives, RIC created a refreshed and manageable framework to evaluate the quality and outcomes of its network activities. RIC’s logic model articulates how attaining short-term goals is expected to contribute to longer-term outcomes and broader research impacts, and how network activities build toward a larger common goal. The evaluation evidence that RIC plans to collect will help the network understand what is changing as a result of RIC activities, and what can be improved.

The takeaway message from this example is that taking the time to collaboratively define a logic model, and using that logic model to guide evaluation decisions, yields a strong evaluation framework that provides benefit to the network and to partner organizations.